Graph Matching Benchmark

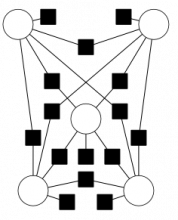

We evaluate a range of existing open-source graph matching algorithms. Our study compares the performance of these algorithms on a diverse set of computer vision problems. The evaluation focuses on both the speed of each algorithm and the objective value of the solution it returns.

We collected 11 datasets from applications in computer vision and bio-imaging for evaluation of graph matching algorithms.

In total, these datasets contain 451 problem instances. We modified the costs in several datasets to make them amenable to some algorithms; see the supplementary material of our paper "A Comparative Study of Graph Matching Algorithms in Computer Vision". This modification shifts the objective value of every feasible assignment by the same constant and therefore does not change which assignments are optimal or how solutions compare with one another.
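To illustrate why a constant shift is harmless, here is a minimal toy sketch. It is not the modification used in the benchmark; it assumes a tiny problem in which every feasible assignment is a bijection between three left and three right nodes, and the cost values and the names `objective`, `solve`, and `shift` are made up for this example. Adding the same constant to every unary cost then shifts each feasible objective by `shift * n`, so the minimizer stays the same.

```python
from itertools import permutations

# Toy costs (hypothetical values, not taken from any benchmark dataset).
# unary[i][s]: cost of matching left node i to right node s.
unary = [[4, 1, 3],
         [2, 0, 5],
         [3, 2, 2]]
# pairwise[(i, s, j, t)]: cost paid when i is matched to s AND j is matched to t.
pairwise = {(0, 1, 1, 0): -2, (1, 0, 2, 2): 1, (0, 0, 1, 1): 3}


def objective(perm, unary, pairwise):
    """Objective of the assignment that matches left node i to perm[i]."""
    cost = sum(unary[i][s] for i, s in enumerate(perm))
    cost += sum(c for (i, s, j, t), c in pairwise.items()
                if perm[i] == s and perm[j] == t)
    return cost


def solve(unary, pairwise):
    """Brute-force minimizer over all bijections (only viable for toy sizes)."""
    n = len(unary)
    return min(permutations(range(n)),
               key=lambda p: objective(p, unary, pairwise))


# Add the same constant to every unary cost.
shift = 10
shifted_unary = [[c + shift for c in row] for row in unary]

best = solve(unary, pairwise)
best_shifted = solve(shifted_unary, pairwise)

# Same optimal assignment; the objective differs by exactly shift * n.
assert best == best_shifted
delta = (objective(best, shifted_unary, pairwise)
         - objective(best, unary, pairwise))
assert delta == shift * len(unary)
```

The argument relies on every feasible assignment selecting the same number of shifted terms. If assignments may leave nodes unmatched, a uniform shift of the unary costs is no longer constant across assignments, and a different construction is needed.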

The Graph Matching Benchmark Suite is hosted at https://vislearn.github.io/gmbench/datasets/