Deep neural networks have achieved remarkable progress in data analysis across a broad range of domains, such as object recognition, machine translation, and medical diagnostics.

An invertible neural network (INN) was trained to add colour to grayscale images. Which one is the original image? The answer is at the bottom of the page. Image adapted from [1].

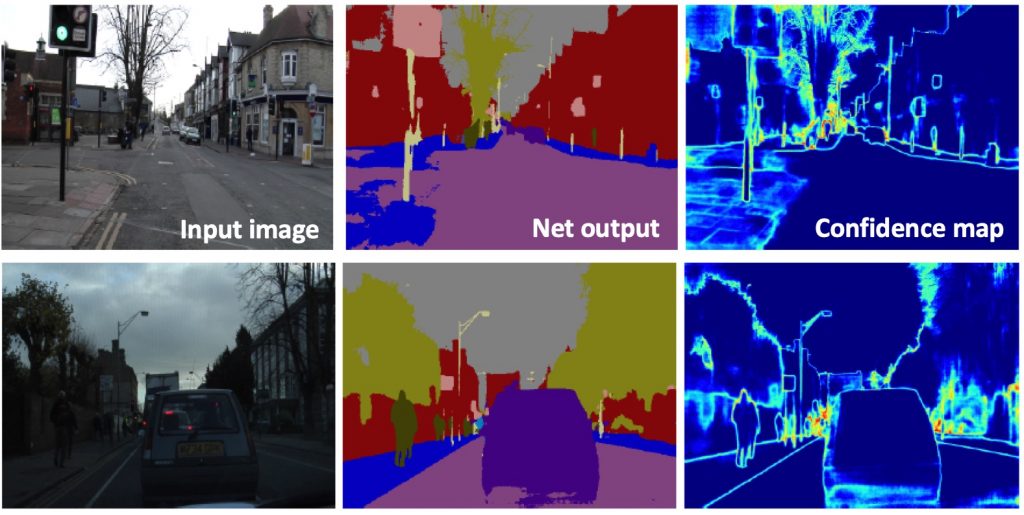

A critical application for neural networks: deciding where a car can and cannot drive. Reliable confidence estimates are essential for making the right decisions. Image adapted from [2].

- How can we design deep networks under given constraints?

- (How) Can we be confident in the answers given by a network?

- Can we explain the behaviour of a network?

We tackle these questions using the new, unconventional framework of transport theory: we treat the input and output of a network as probability distributions. This makes the problems more tractable, both mathematically and algorithmically. The framework is realised in the form of Invertible Neural Networks (INNs).
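To make "invertible" and "probability distributions" concrete, here is a minimal sketch of one common INN building block, the affine coupling layer known from RealNVP. It is an illustration only, not the implementation used in [1]. The functions `s` and `t` are hypothetical stand-ins for the small learned subnetworks a real INN would contain. The layer leaves the first half of the input unchanged and rescales and shifts the second half using values computed from the first half, so the inverse is exact and the log-determinant of the Jacobian, which the change-of-variables formula needs to relate the input and output distributions, is simply a sum of log-scales.

```python
import math

# Hypothetical stand-ins for the learned subnetworks of a real INN.
# They can be arbitrary functions: inverting the layer never requires inverting s or t.
def s(x1):
    """Log-scale computed from the untouched half."""
    return [0.5 * math.tanh(v) for v in x1]

def t(x1):
    """Shift computed from the untouched half."""
    return [v * v - 1.0 for v in x1]

def coupling_forward(x):
    """Affine coupling: y1 = x1, y2 = x2 * exp(s(x1)) + t(x1)."""
    assert len(x) % 2 == 0, "this sketch assumes an even input dimension"
    d = len(x) // 2
    x1, x2 = x[:d], x[d:]
    log_scale, shift = s(x1), t(x1)
    y2 = [b * math.exp(ls) + sh for b, ls, sh in zip(x2, log_scale, shift)]
    # The Jacobian is triangular with diagonal (1, ..., 1, exp(s_1), ..., exp(s_d)),
    # so log|det J| is the sum of the log-scales.
    log_det = sum(log_scale)
    return x1 + y2, log_det

def coupling_inverse(y):
    """Exact inverse: x1 = y1, x2 = (y2 - t(y1)) * exp(-s(y1))."""
    assert len(y) % 2 == 0, "this sketch assumes an even input dimension"
    d = len(y) // 2
    y1, y2 = y[:d], y[d:]
    log_scale, shift = s(y1), t(y1)
    x2 = [(b - sh) * math.exp(-ls) for b, ls, sh in zip(y2, log_scale, shift)]
    return y1 + x2

def log_likelihood(x):
    """Change of variables with a standard normal on the output:
    log p_X(x) = log N(f(x); 0, I) + log|det J_f(x)|."""
    z, log_det = coupling_forward(x)
    log_pz = sum(-0.5 * v * v - 0.5 * math.log(2.0 * math.pi) for v in z)
    return log_pz + log_det

x = [0.3, -1.2, 2.0, 0.7]
y, log_det = coupling_forward(x)
x_rec = coupling_inverse(y)
print(all(abs(a - b) < 1e-12 for a, b in zip(x, x_rec)))  # True
print(log_likelihood(x))
```

Because the inverse is exact, the same network can be evaluated in both directions. Stacking several such layers, with the two halves swapped or permuted between layers, yields an expressive model that remains invertible and keeps the log-determinant cheap to compute.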

Literature

[1] L. Ardizzone, J. Kruse, C. Lüth, C. Rother, and U. Köthe. Guided image generation with conditional invertible neural networks. arXiv:1907.02392, 2019.

[2] A. Kendall and Y. Gal. What uncertainties do we need in Bayesian deep learning for computer vision? In Advances in Neural Information Processing Systems, pp. 5574-5584, 2017.

Answer: the original bird is the one on the far right.