Abstract:

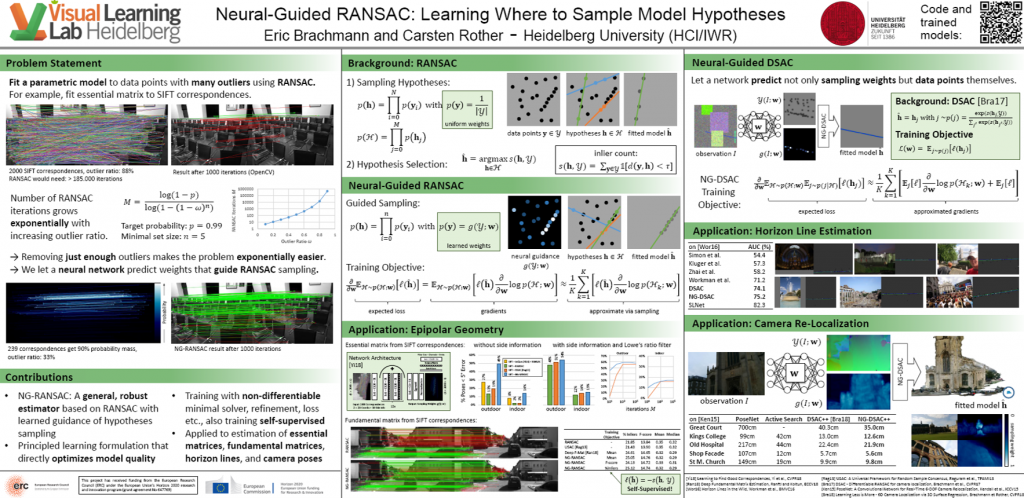

We present Neural-Guided RANSAC (NG-RANSAC), an extension to the classic RANSAC algorithm from robust optimization. NG-RANSAC uses prior information to improve model hypothesis search, increasing the chance of finding outlier-free minimal sets. Previous works use heuristic side-information like hand-crafted descriptor distance to guide hypothesis search. In contrast, we learn hypothesis search in a principled fashion that lets us optimize an arbitrary task loss during training, leading to large improvements on classic computer vision tasks. We present two further extensions to NG-RANSAC. Firstly, using the inlier count itself as training signal allows us to train neural guidance in a self-supervised fashion. Secondly, we combine neural guidance with differentiable RANSAC to build neural networks which focus on certain parts of the input data and make the output predictions as good as possible. We evaluate NG-RANSAC on a wide array of computer vision tasks, namely estimation of epipolar geometry, horizon line estimation and camera re-localization. We achieve superior or competitive results compared to state-of-the-art robust estimators, including very recent, learned ones.
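The core idea of neural guidance can be sketched in a few lines of Python. This is an illustrative toy, not the authors' implementation; their code is in the repositories listed under Code. It fits a 2D line with RANSAC, but draws minimal sets in proportion to per-point weights `softmax(logits)` instead of uniformly, and scores hypotheses by inlier count. It also estimates a score-function (REINFORCE-style) gradient of the expected loss with respect to the logits, using the negative inlier fraction as a self-supervised loss. Two simplifications are assumptions of this sketch: the two points of a minimal set are drawn independently, so p(M) is the product of the per-point probabilities, and the loss is attached to each sampled minimal set rather than to the final RANSAC output. The function names, the threshold, and the mean-loss baseline are hypothetical choices.

```python
import math
import random


def softmax(logits):
    """Turn per-point logits (e.g. network outputs) into sampling probabilities."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def line_from_points(p, q):
    """Line a*x + b*y + c = 0 through p and q, with (a, b) unit length; None if degenerate."""
    (x1, y1), (x2, y2) = p, q
    a, b = y2 - y1, x1 - x2
    norm = math.hypot(a, b)
    if norm < 1e-12:
        return None
    a, b = a / norm, b / norm
    return a, b, -(a * x1 + b * y1)


def inlier_count(line, points, threshold):
    """Number of points whose distance to the line is below the threshold."""
    a, b, c = line
    return sum(1 for x, y in points if abs(a * x + b * y + c) < threshold)


def sample_minimal_set(probs, rng):
    """Two independent draws proportional to probs, so p(M) = p_i * p_j."""
    return rng.choices(range(len(probs)), weights=probs, k=2)


def guided_ransac_line(points, logits, iterations=100, threshold=0.1, seed=0):
    """RANSAC for a 2D line where minimal sets are sampled by learned weights."""
    rng = random.Random(seed)
    probs = softmax(logits)
    best_line, best_score = None, -1
    for _ in range(iterations):
        i, j = sample_minimal_set(probs, rng)
        line = line_from_points(points[i], points[j])
        if line is None:  # same index drawn twice, or coincident points
            continue
        score = inlier_count(line, points, threshold)
        if score > best_score:
            best_line, best_score = line, score
    return best_line, best_score


def expected_loss_gradient(points, logits, samples=200, threshold=0.1, seed=0):
    """Monte Carlo estimate of d E[loss] / d logits via the score function.

    loss(M) = -(inlier fraction of the line through M); degenerate sets score 0.
    d log p(M) / d z_k = sum over the draws i in M of (1[i == k] - p_k).
    """
    rng = random.Random(seed)
    probs = softmax(logits)
    n = len(points)
    draws, losses = [], []
    for _ in range(samples):
        idx = sample_minimal_set(probs, rng)
        line = line_from_points(points[idx[0]], points[idx[1]])
        frac = inlier_count(line, points, threshold) / n if line is not None else 0.0
        draws.append(idx)
        losses.append(-frac)
    baseline = sum(losses) / samples  # subtracting the mean loss reduces variance
    grad = [0.0] * n
    for idx, loss in zip(draws, losses):
        for k in range(n):
            dlogp = sum((1.0 if i == k else 0.0) - probs[k] for i in idx)
            grad[k] += (loss - baseline) * dlogp / samples
    return grad
```

For example, with ten points on the line y = x plus three outliers, uniform logits already find the line, and a gradient-descent step `logits[k] -= lr * grad[k]` raises the sampling weight of the collinear points relative to the outliers. Because the training signal is the inlier count itself, no ground-truth model is needed.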

Results:

[Figures: estimation of epipolar geometry; estimation of horizon lines]

Code:

NG-RANSAC for estimation of essential or fundamental matrices: https://github.com/vislearn/ngransac/

NG-DSAC for horizon line estimation: https://github.com/vislearn/ngdsac_horizon

NG-DSAC for camera re-localization: https://github.com/vislearn/ngdsac_camreloc

Note: NG-RANSAC has also been extended to CONSAC (CVPR 2020) for multi-model fitting.

Publication:

E. Brachmann and C. Rother, “Neural-Guided RANSAC: Learning Where to Sample Model Hypotheses”, ICCV 2019.